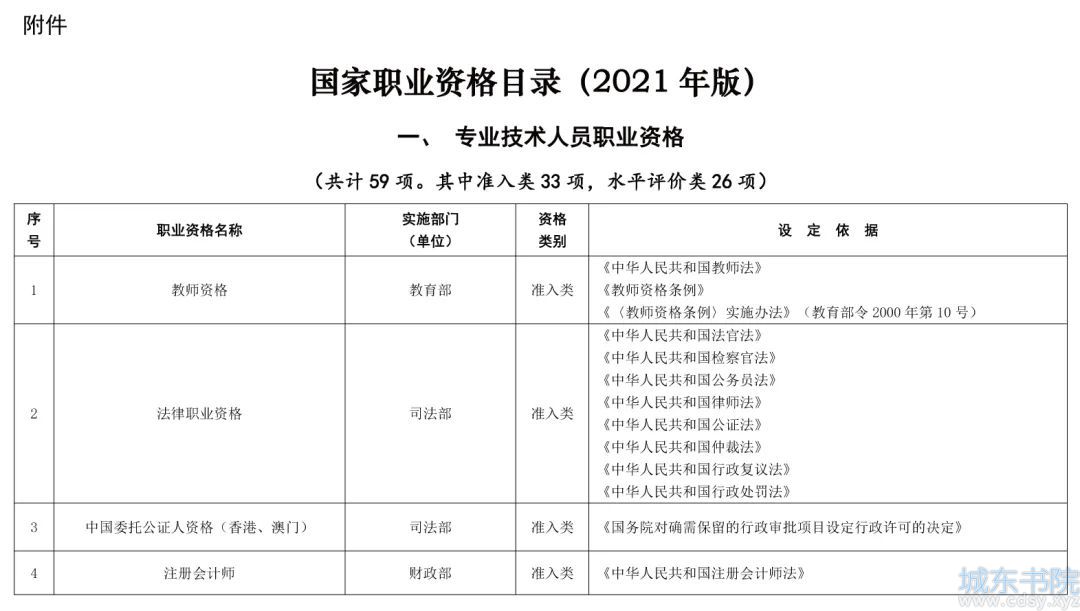

限流算法实现解读

时间:12-14来源:作者:点击数:

漏桶算法

漏桶(Leaky Bucket)算法,水(请求)先进入到漏桶里,漏桶以一定的速度出水(接口有响应速率),当流入速度过大时,会直接溢出(访问频率超过接口响应速率),然后就拒绝请求,而当入小于出的情况下,漏桶不起任何作用。可以看出漏桶算法能强行限制数据的传输速率。示意图如下:

注意:在实际应用中,漏桶算法强制限定流量速率后,多出的(溢出的 excess)流量可以被利用起来,并非完全丢弃,我们可以把它收集到一个队列(burst) 里面,做流量队列,尽量做到合理利用所有资源。

漏桶算法:水(请求)先进入到漏桶里,漏桶以一定的速度出水,当水流入速度过大会直接溢出(拒绝服务),可以看出漏桶算法能强行限制数据的传输速率

- 流入:以任意速率往桶中放入水滴。

- 流出:以固定速率从桶中流出水滴。

用白话具体说明:假设漏斗总支持并发100个最大请求,如果超过100个请求,那么会提示系统繁忙,请稍后再试,数据输出那可以设置1个线程池,处理线程数5个,每秒处理20个请求。

- 优点:无法击垮服务

- 缺点:因为当流出速度固定,大规模持续突发量,无法多余处理,浪费网络带宽

openresty/lua-resty-limit-traffic 源码解读

-- Copyright (C) Yichun Zhang (agentzh)

--

-- This library is an approximate Lua port of the standard ngx_limit_req

-- module.

local ffi = require "ffi"

local math = require "math"

local ngx_shared = ngx.shared

local ngx_now = ngx.now

local setmetatable = setmetatable

local ffi_cast = ffi.cast

local ffi_str = ffi.string

local abs = math.abs

local tonumber = tonumber

local type = type

local assert = assert

local max = math.max

-- TODO: we could avoid the tricky FFI cdata when lua_shared_dict supports

-- hash-typed values as in redis.

ffi.cdef[[

struct lua_resty_limit_req_rec {

unsigned long excess;

uint64_t last; /* time in milliseconds */

/* integer value, 1 corresponds to 0.001 r/s */

};

]]

local const_rec_ptr_type = ffi.typeof("const struct lua_resty_limit_req_rec*")

local rec_size = ffi.sizeof("struct lua_resty_limit_req_rec")

-- we can share the cdata here since we only need it temporarily for

-- serialization inside the shared dict:

local rec_cdata = ffi.new("struct lua_resty_limit_req_rec")

local _M = {

_VERSION = '0.07'

}

local mt = {

__index = _M

}

function _M.new(dict_name, rate, burst)

local dict = ngx_shared[dict_name]

if not dict then

return nil, "shared dict not found"

end

assert(rate > 0 and burst >= 0)

local self = {

dict = dict,

-- 这里 rate 和 burst 都乘了1000,实际上是为了方便计算出来的 excess 的小数,能够以一定精度(0.001)存进无符号长整形中 (struct lua_resty_limit_req_rec 中定义)

-- 常见类似业务处理,以整形存储金额,外部单位是元,内部乘1000转换为厘存,精度到厘,厘以下的就会丢失掉。

rate = rate * 1000,

burst = burst * 1000,

}

return setmetatable(self, mt)

end

-- sees an new incoming event

-- the "commit" argument controls whether should we record the event in shm.

-- FIXME we have a (small) race-condition window between dict:get() and

-- dict:set() across multiple nginx worker processes. The size of the

-- window is proportional to the number of workers.

function _M.incoming(self, key, commit)

local dict = self.dict

local rate = self.rate

local now = ngx_now() * 1000

local excess

-- it's important to anchor the string value for the read-only pointer

-- cdata:

local v = dict:get(key)

if v then

if type(v) ~= "string" or #v ~= rec_size then

return nil, "shdict abused by other users"

end

local rec = ffi_cast(const_rec_ptr_type, v)

local elapsed = now - tonumber(rec.last)

-- print("elapsed: ", elapsed, "ms")

-- we do not handle changing rate values specifically. the excess value

-- can get automatically adjusted by the following formula with new rate

-- values rather quickly anyway.

-- 公式:新水位 = max(老水位【rec.excess】 - 放水速率【rate】 * 放水时间【abs(elapsed) / 1000】 + 本次请求所占水位)

excess = max(tonumber(rec.excess) - rate * abs(elapsed) / 1000 + 1000,

0)

-- print("excess: ", excess)

if excess > self.burst then

return nil, "rejected"

end

else

excess = 0

end

if commit then

rec_cdata.excess = excess

rec_cdata.last = now

dict:set(key, ffi_str(rec_cdata, rec_size))

end

-- return the delay in seconds, as well as excess

return excess / rate, excess / 1000

end

return _M

漏桶算法和令牌桶算法,区别到底在哪里?

- 如果要让自己的系统不被打垮,用令牌桶。如果保证别人的系统不被打垮,用漏桶算法;

- 在“令牌桶算法”中,只要令牌桶中存在令牌,那么就允许突发地传输数据直到达到用户配置的门限,所以它适合于具有突发特性的流量。

openresty 漏桶算法验证

mkdir conf.d

创建 conf.d/nginx_limit.conf 文件,内容如下

lua_shared_dict my_limit_req_store 100m;

server {

listen 8700;

default_type text/html;

location / {

return 200 OK;

}

location /limit {

access_by_lua_block {

local limit_req = require "resty.limit.req"

-- 限制每秒 200 个请求 (rate),以及 100 的等待队列 (burst), 超过每次 300,直接拒绝

local rate = tonumber(ngx.var.arg_rate or 200)

local burst = tonumber(ngx.var.arg_burst or 100)

local lim, err = limit_req.new("my_limit_req_store", rate, burst)

if not lim then

ngx.log(ngx.ERR, "failed to instantiate a resty.limit.req object: ", err)

return ngx.exit(500)

end

-- 以远端IP为限制 key

local delay, excess = lim:incoming(ngx.var.binary_remote_addr, true)

if excess == "rejected" then

ngx.log(ngx.ERR, "rejected")

-- ngx.say, ngx.print 发送消息包,必然要先发送消息头,所以需要提前设置 response status (默认为200)

ngx.status = 503

ngx.say("XX delay:", delay, ", rate: ", rate, ", burst: ", burst)

return ngx.exit(503)

end

ngx.say("OK delay: ", delay, ", rate: ", rate, ", burst: ", burst, ", excess: ", excess)

return ngx.exit(200)

}

}

}

docker pull openresty/openresty:1.19.9.1-2-buster-fat

docker run -d -p 8700:8700 -v $(pwd)/conf.d:/etc/nginx/conf.d openresty/openresty:1.19.9.1-2-buster-fat

docker logs stoic_elbakyan 查看日志

使用 blow 压测

- blow ":8700/limit?rate=200&burst=10" -d 1m

- blow ":8700/limit?rate=200&burst=100" -d 1m

- blow ":8700/limit?rate=200&burst=100" -d 1m -c 2 --qps 100

- blow ":8700/limit?rate=200&burst=100" -d 1m -c 10 --qps 20

结果

QPS 设在 200 时,未触发限流(5xx)

方便获取更多学习、工作、生活信息请关注本站微信公众号

上一篇:垃圾回收的算法与实现 读书笔记

下一篇:LRU 和 ARC 自适应缓存替换算法

湘公网安备 43102202000103号

湘公网安备 43102202000103号