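Python: find every link inside the body tag of all HTML files in a directory (including subdirectories), write the links one per row to an xlsx spreadsheet, and record the full path of the HTML file each link came from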

Date: 10-01


import os
from pathlib import Path
from urllib.parse import urljoin

import openpyxl
from bs4 import BeautifulSoup


def find_html_files(directory):
    """Find all HTML files in the given directory and its subdirectories."""
    html_files = []
    for root, dirs, files in os.walk(directory):
        for file in files:
            if file.lower().endswith(('.html', '.htm')):
                # Store absolute paths so the spreadsheet shows the full path
                html_files.append(os.path.abspath(os.path.join(root, file)))
    return html_files


def extract_links_from_html(file_path):
    """Extract all links inside the <body> tag of an HTML file."""
    links = []
    try:
        # Try several encodings in turn. iso-8859-1 can decode any byte
        # sequence, so it acts as the last-resort fallback.
        encodings = ['utf-8', 'gbk', 'gb2312', 'iso-8859-1']
        content = None
        for encoding in encodings:
            try:
                with open(file_path, 'r', encoding=encoding) as file:
                    content = file.read()
                break
            except UnicodeDecodeError:
                continue
        if content is None:
            print(f"  Could not decode file: {file_path}")
            return links

        soup = BeautifulSoup(content, 'html.parser')
        body = soup.body
        if body:
            # Base URI for resolving relative links; as_uri() also produces
            # a valid file:// URI on Windows.
            base_url = Path(file_path).resolve().as_uri()
            # Find every <a> element inside <body> that has an href
            for link in body.find_all('a', href=True):
                href = link.get('href', '').strip()
                # Skip JavaScript, mailto, and in-page anchor links
                if href and not href.lower().startswith(('javascript:', 'mailto:', '#')):
                    # Use a placeholder when the link has no visible text
                    link_text = link.get_text(strip=True) or "[no text]"
                    links.append({
                        'url': href,
                        'absolute_url': urljoin(base_url, href),
                        'text': link_text,
                        'tag_name': link.name,
                    })
    except Exception as e:
        print(f"Error while processing {file_path}: {e}")
    return links

def save_links_to_xlsx(links_data, output_file):
    """Save the link data to an XLSX file."""
    workbook = openpyxl.Workbook()
    worksheet = workbook.active
    worksheet.title = "HTML Links"

    # Header row
    headers = ['No.', 'HTML file path', 'Link text', 'Link URL', 'Absolute URL', 'Tag type']
    for col, header in enumerate(headers, 1):
        worksheet.cell(row=1, column=col, value=header)

    # Column widths
    worksheet.column_dimensions['A'].width = 8   # No.
    worksheet.column_dimensions['B'].width = 50  # HTML file path
    worksheet.column_dimensions['C'].width = 30  # Link text
    worksheet.column_dimensions['D'].width = 40  # Link URL
    worksheet.column_dimensions['E'].width = 50  # Absolute URL
    worksheet.column_dimensions['F'].width = 12  # Tag type

    # Data rows: one row per link
    row = 2
    serial_number = 1
    for file_path, links in links_data.items():
        for link in links:
            worksheet.cell(row=row, column=1, value=serial_number)
            worksheet.cell(row=row, column=2, value=file_path)
            worksheet.cell(row=row, column=3, value=link['text'])
            worksheet.cell(row=row, column=4, value=link['url'])
            worksheet.cell(row=row, column=5, value=link['absolute_url'])
            worksheet.cell(row=row, column=6, value=link['tag_name'])
            row += 1
            serial_number += 1

    # Write the workbook to disk
    workbook.save(output_file)
    print(f"Links saved to: {output_file}")

def main():
    # Read the directory to search; strip quotes left by drag-and-drop paths
    directory = input("Enter the directory to search: ").strip().strip('"')
    # isdir() also rejects a path that exists but points to a regular file
    if not os.path.isdir(directory):
        print("The specified directory does not exist!")
        return

    # Output file name
    output_file = "html_links.xlsx"

    print("Searching for HTML files...")
    html_files = find_html_files(directory)
    print(f"Found {len(html_files)} HTML files")
    if not html_files:
        print("No HTML files found!")
        return

    all_links = {}
    total_links = 0
    print("\nExtracting links...")

    # Process each HTML file
    for i, html_file in enumerate(html_files, 1):
        print(f"Processing ({i}/{len(html_files)}): {os.path.basename(html_file)}")
        links = extract_links_from_html(html_file)
        if links:
            all_links[html_file] = links
            total_links += len(links)
            print(f"  Found {len(links)} links")
        else:
            print("  No links found")

    # Save to the XLSX file
    if all_links:
        save_links_to_xlsx(all_links, output_file)
        print(f"\nDone! Found {total_links} links in {len(all_links)} HTML files")
        print(f"Results saved to: {os.path.abspath(output_file)}")

        # Per-file statistics
        print("\nStatistics:")
        for file_path, links in all_links.items():
            print(f"  {os.path.basename(file_path)}: {len(links)} links")
    else:
        print("No links found")


if __name__ == "__main__":
    main()

Installing dependencies

First, install the required libraries:

pip install beautifulsoup4 openpyxl

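Script features

- xlsx output: uses the openpyxl library to write the modern Excel (.xlsx) format
- Multiple encodings: tries UTF-8, GBK, GB2312, and ISO-8859-1 in turn when reading each HTML file
- Full path recording: every link is labeled with the full path of the HTML file it came from
- Link filtering: skips `javascript:`, `mailto:`, and in-page anchor (`#`) links
- Detailed output: prints processing progress and per-file statistics
- Formatted sheet: sets fixed column widths and adds a serial-number column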
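
The encoding fallback listed above can be tried on its own. The following is a minimal sketch, not the script's exact code: the helper name `read_text_with_fallback` and the shortened encoding tuple are assumptions made for illustration. It reads the raw bytes once and decodes them with each candidate until one succeeds, which also makes it visible which encoding was used.

```python
import tempfile
from pathlib import Path

# Candidate encodings, tried in order. iso-8859-1 maps every byte to a
# character, so it never fails and has to stay last.
ENCODINGS = ("utf-8", "gbk", "iso-8859-1")


def read_text_with_fallback(path, encodings=ENCODINGS):
    """Return (text, encoding) for the first encoding that decodes the file."""
    raw = Path(path).read_bytes()
    for encoding in encodings:
        try:
            return raw.decode(encoding), encoding
        except UnicodeDecodeError:
            continue
    # Only reachable when the tuple has no catch-all encoding
    return None, None


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        sample = Path(tmp) / "sample.html"
        # Write a GBK-encoded file that is not valid UTF-8
        sample.write_bytes("<a href='a.html'>链接</a>".encode("gbk"))
        text, used = read_text_with_fallback(sample)
        print(used, text)
```

Because a catch-all encoding such as iso-8859-1 always succeeds, a file in an unlisted encoding is read as garbled text rather than reported as undecodable; the order of the tuple decides which interpretation wins.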

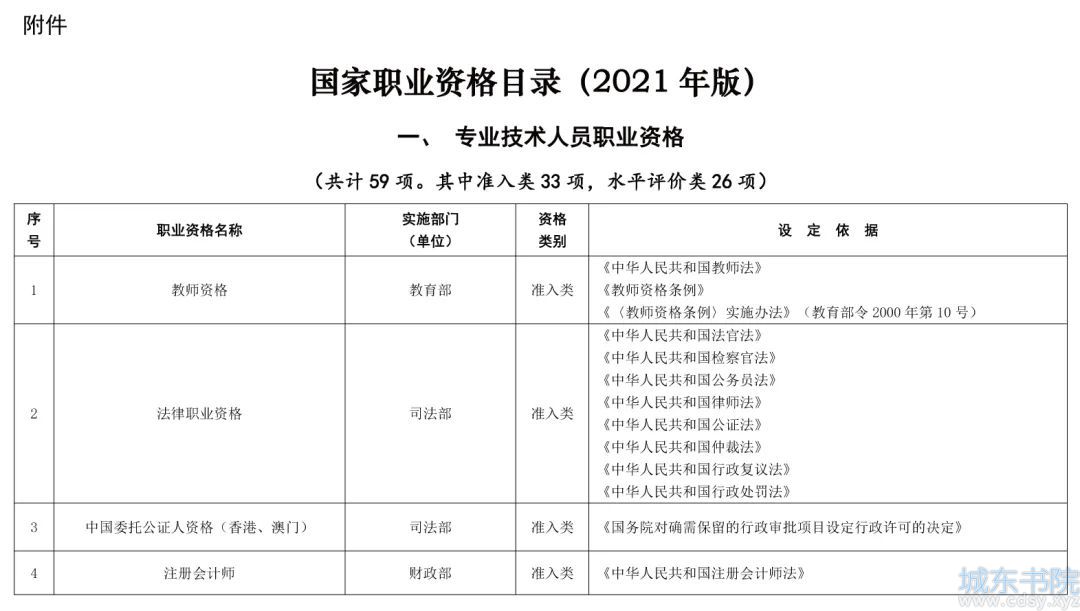

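Output table structure

The generated xlsx file contains the following columns:

- No.: serial number of the link
- HTML file path: full path of the HTML file the link came from
- Link text: the visible text of the link
- Link URL: the link address exactly as written in the HTML
- Absolute URL: the link resolved against the location of the HTML file
- Tag type: the HTML tag name (usually 'a')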
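
To make the difference between the Link URL and Absolute URL columns concrete, here is a small sketch of how a relative link resolves against the file that contains it. The helper name `resolve_link` and the sample paths are assumptions for illustration; `urljoin` and `Path.as_uri()` are standard-library calls.

```python
from pathlib import Path
from urllib.parse import urljoin


def resolve_link(base_uri, href):
    """Resolve href against the URI of the HTML file that contains it."""
    return urljoin(base_uri, href)


if __name__ == "__main__":
    # as_uri() requires an absolute path, which resolve() provides
    base = Path("index.html").resolve().as_uri()
    for href in ("about.html", "../img/logo.png", "https://example.com/"):
        print(href, "->", resolve_link(base, href))
```

Relative links end up as `file://` URIs next to the source file, while links that already carry a scheme, such as `https://`, pass through unchanged.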

Usage

- Run the script
- Enter the path of the directory to search
- Wait for processing to finish
- Open the generated html_links.xlsx file
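
The script asks for the directory interactively. For unattended runs, the same two settings could be taken from the command line instead. This is a sketch under assumptions: the function `parse_args` and the `-o/--output` option are not part of the original script, and only the default file name `html_links.xlsx` is taken from it.

```python
import argparse


def parse_args(argv=None):
    """Parse the search directory and the output file name."""
    parser = argparse.ArgumentParser(
        description="Collect links from HTML files into an xlsx workbook."
    )
    parser.add_argument("directory", help="directory to search recursively")
    parser.add_argument(
        "-o", "--output", default="html_links.xlsx",
        help="output workbook (default: html_links.xlsx)",
    )
    return parser.parse_args(argv)


if __name__ == "__main__":
    # Explicit argument list for demonstration; pass None to read sys.argv
    args = parse_args(["./site", "-o", "report.xlsx"])
    print(args.directory, args.output)
```

In `main()`, `args.directory` and `args.output` would then replace the `input()` call and the hard-coded output file name.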

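Advanced version (supports more link types)

If you also need to extract other kinds of links (images, CSS, JS, and so on), you can use this enhanced extraction function: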

import os
from pathlib import Path
from urllib.parse import urljoin

import openpyxl
from bs4 import BeautifulSoup


def extract_all_links_from_html(file_path):
    """Extract links of every supported type inside the <body> of an HTML file."""
    links = []
    try:
        # iso-8859-1 can decode any byte sequence, so it stays last
        encodings = ['utf-8', 'gbk', 'gb2312', 'iso-8859-1']
        content = None
        for encoding in encodings:
            try:
                with open(file_path, 'r', encoding=encoding) as file:
                    content = file.read()
                break
            except UnicodeDecodeError:
                continue
        if content is None:
            return links

        soup = BeautifulSoup(content, 'html.parser')
        body = soup.body
        if not body:
            return links

        # Tags that may carry a link: (tag name, attribute, link type)
        link_tags = [
            ('a', 'href', 'hyperlink'),
            ('img', 'src', 'image'),
            ('link', 'href', 'resource link'),
            ('script', 'src', 'script'),
            ('iframe', 'src', 'iframe'),
        ]

        # Base URI for resolving relative links; valid on Windows as well
        base_url = Path(file_path).resolve().as_uri()
        for tag_name, attr, link_type in link_tags:
            # Only elements that actually have the attribute
            for element in body.find_all(tag_name, **{attr: True}):
                url = element.get(attr, '').strip()
                if url and not url.lower().startswith(('javascript:', 'mailto:', '#')):
                    # Elements such as <img> have no text content
                    link_text = element.get_text(strip=True) or "[no text]"
                    links.append({
                        'url': url,
                        'absolute_url': urljoin(base_url, url),
                        'text': link_text,
                        'tag_name': tag_name,
                        'link_type': link_type,
                    })
    except Exception as e:
        print(f"Error while processing {file_path}: {e}")
    return links

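This enhanced version extracts more types of linked resources. Each returned link carries an extra `link_type` field. To use it, call `extract_all_links_from_html` in place of `extract_links_from_html` in `main()`, and add a column in `save_links_to_xlsx` if the link type should appear in the spreadsheet.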
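
One way the extra `link_type` field could reach the spreadsheet is to flatten the per-file dictionary into plain rows first. This is a sketch, not the original save routine: `HEADERS` and `links_to_rows` are hypothetical names, and the seventh column is an assumption about where the new field would go.

```python
# Column titles; "Link type" is the extra column for the enhanced version
HEADERS = ["No.", "HTML file path", "Link text", "Link URL",
           "Absolute URL", "Tag type", "Link type"]


def links_to_rows(links_data):
    """Flatten {file_path: [link, ...]} into one list per spreadsheet row."""
    rows = []
    for file_path, links in links_data.items():
        for link in links:
            rows.append([
                len(rows) + 1,              # running serial number
                file_path,
                link["text"],
                link["url"],
                link["absolute_url"],
                link["tag_name"],
                link.get("link_type", ""),  # empty for the basic extractor
            ])
    return rows


if __name__ == "__main__":
    sample = {
        "/site/index.html": [
            {"url": "a.png", "absolute_url": "file:///site/a.png",
             "text": "[no text]", "tag_name": "img", "link_type": "image"},
        ],
    }
    for row in links_to_rows(sample):
        print(row)
```

With openpyxl, `worksheet.append(HEADERS)` followed by `worksheet.append(row)` for each row writes the same table without tracking row and column indices by hand.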

